SUNBURST SolarWinds BackDoor : Crime Scene Forensics Part 2 (continued)

First, let me be clear that I have no insider knowledge. This is my best guess at what occurred, based on publicly available information.

If you’ve arrived at this post, I’d suggest reading the prior post to gain context.

As details are still emerging, let’s speculatively examine the attacker’s activity after the initial entry point, and their reconnaissance inside SolarWinds’ software supply chain.

Tomislav Peričin, founder of ReversingLabs, conducted a deep forensic investigation that’s well worth reading.

In summary (excerpts from Tomislav’s excellent post-mortem), he concludes:

- The attacker infiltrated the source code management system in October 2019 and tampered with version 2019.4.5200.8890, adding a benign code snippet; over time, further changes built on it to eventually construct the backdoor.

- After getting in through an entry point that is yet to be confirmed (Office 365, or the FTP credentials exposed on GitHub), the attacker moved laterally into the source code management system and successfully blended into SolarWinds’ software development and build process over an extended period (more than a year).

- This first code modification (albeit benign) was clearly just a proof of concept. Their multi-step action plan was as follows:

– Blend into the software development process (situational awareness).

– Inject benign code snippets as patches (eventually adding up to the backdoor across multiple check-ins and patches).

– Verify whether the patches/commits go undetected (recon on peer code reviews, security analysis, audits, etc.).

– Compromise the build system (recon on build operations integrity).

– Verify that their signed packages appear on the client side as expected (determine supply chain reachability).

- The source code of the affected library was directly modified to include malicious backdoor code, which was compiled, signed and delivered through the existing software patch release management system.

Once these objectives were met, and the attackers proved to themselves that the supply chain could be compromised, they started planning the real attack payload.

Itay Cohen, a malware researcher at Check Point, also shared his perspective.

If we’ve collectively arrived at this section of the post, we should expect that other software development companies (ours included) can also become a target, or may already be compromised.

Before you proceed to shore up your defenses in a hurry, please take a moment to answer the following:

- Is your software development process (SDLC) impenetrable?

- What is the efficacy of your peer code review amongst your engineering staff?

- Is your CI/CD engine (build/release) and release process impenetrable?

- Do you conduct regular static code analysis, reverse engineering and dynamic analysis as a part of your software development lifecycle?

- If you do, are the results produced by these tools triaged or dropped on the floor?

- Can your standard run-of-the-mill OWASP-based static analysis tools or code-grepping modules detect a sequence of individually benign check-ins that builds up to an eventual backdoor?

How did the attacker blend in and masquerade as a SolarWinds developer?

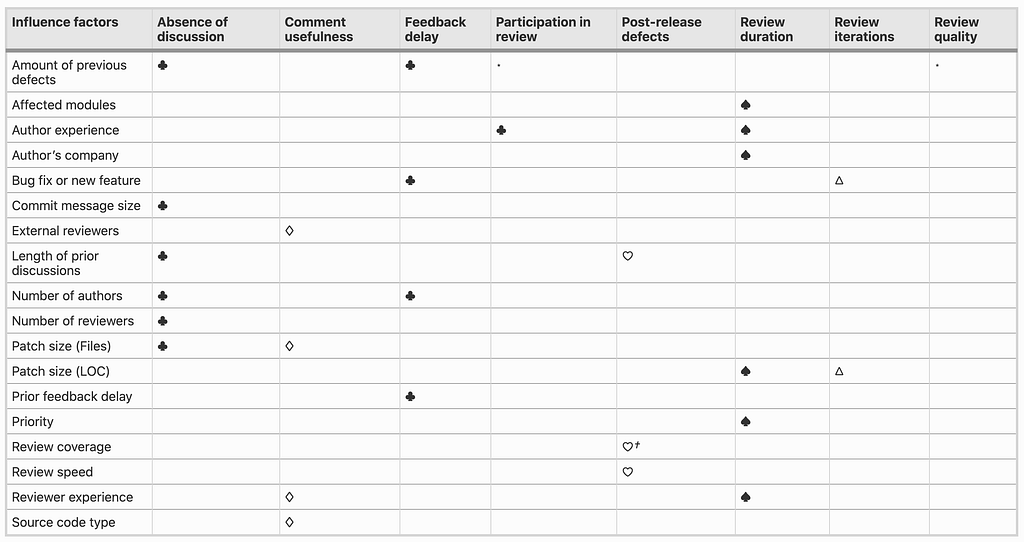

Peer code review can improve software quality and security, but its effectiveness depends on several factors.

For this purpose, a good indicator is how concentrated knowledge is in individual developers. This is popularly known as the bus/truck factor: the “number of key developers who would need to be hit by a bus (incapacitated), to send the project into such disarray that it would not be able to proceed”. Alternative names for the bus factor are the pony factor and the truck factor.

Assessing the truck factor is a good starting point, as it could have served as a risk indicator when the attacker began checking in code across versions into the SolarWinds Orion code repository.

It is thus critical to calculate truck factor information for any patch, file, directory, branch and file extension in the Git repository, highlighting the key developers for each of them and for the project itself.
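As a rough illustration, the sketch below estimates a truck factor with a simple greedy heuristic, similar in spirit to published truck-factor algorithms: repeatedly remove the developer who is a key author of the most files until more than half of the files are left without a key author. It assumes you have already derived a hypothetical `file_authors` mapping (file path to the set of its key developers) from repository history; how "key author" is defined (commit counts, lines owned, recency) is left open, and the repository data is invented.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple


def truck_factor(file_authors: Dict[str, Set[str]],
                 threshold: float = 0.5) -> Tuple[int, List[str]]:
    """Greedy truck-factor estimate.

    Repeatedly removes the developer who is a key author of the most files
    until more than `threshold` of all files have no key author left.
    Returns (truck_factor, developers_removed_in_order).
    """
    remaining = {path: set(authors) for path, authors in file_authors.items()}
    removed: List[str] = []
    while True:
        orphaned = sum(1 for authors in remaining.values() if not authors)
        if orphaned > threshold * len(remaining):
            return len(removed), removed
        coverage: Dict[str, int] = defaultdict(int)
        for authors in remaining.values():
            for dev in authors:
                coverage[dev] += 1
        if not coverage:
            return len(removed), removed  # nothing left to remove (e.g. empty input)
        # Most files covered wins; ties go to the alphabetically first name.
        top = max(sorted(coverage), key=lambda dev: coverage[dev])
        removed.append(top)
        for authors in remaining.values():
            authors.discard(top)


# Hypothetical ownership map (in practice derived from git history).
REPO = {
    "core/scheduler.cs": {"alice"},
    "core/inventory.cs": {"alice", "bob"},
    "core/telemetry.cs": {"alice"},
    "net/http_client.cs": {"bob", "carol"},
    "ui/dashboard.cs": {"carol"},
}

if __name__ == "__main__":
    print(truck_factor(REPO))  # (2, ['alice', 'bob'])
```

Running the same function on the subset of files under one directory, branch or file extension gives the per-area numbers the paragraph above calls for.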

Some of the critical factors (rows) and their correlation with the analyzed outcomes (columns) are depicted in the table below.

Credits : https://dl.acm.org/doi/10.1145/2884781.2884852

Building a model based on these attributes and analyzing the truck factor for every build in your CI pipeline can help flag malicious activity initiated by an adversary who has blended in.
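A full model is beyond the scope of this post, but one simple per-build heuristic built on the same authorship data is to flag every change in the build whose author is not an established key developer of the touched file, or that touches a file with no established owner. The function name and inputs below are hypothetical; in a real pipeline, `changes` would come from the commits included in the build and `file_authors` from repository history.

```python
from typing import Dict, List, Set, Tuple


def flag_unfamiliar_changes(
    changes: List[Tuple[str, str]],
    file_authors: Dict[str, Set[str]],
) -> List[Tuple[str, str, str]]:
    """Return (author, path, reason) for changes in a build that deserve closer review."""
    flagged = []
    for author, path in changes:
        owners = file_authors.get(path)
        if not owners:
            flagged.append((author, path, "file has no established key developer"))
        elif author not in owners:
            flagged.append((author, path, "author is not a key developer of this file"))
    return flagged


# Hypothetical inputs: ownership from history, changes from this build's commits.
OWNERS = {"core/inventory.cs": {"alice", "bob"}, "ui/dashboard.cs": {"carol"}}
BUILD_CHANGES = [
    ("alice", "core/inventory.cs"),
    ("mallory", "core/inventory.cs"),
    ("bob", "core/new_helper.cs"),
]

if __name__ == "__main__":
    for finding in flag_unfamiliar_changes(BUILD_CHANGES, OWNERS):
        print(finding)
```

An adversary committing under a compromised developer's own identity would pass this check, so it complements peer review rather than replacing it.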

Observing the malicious observer

Attackers do not want their software analyzed or reverse engineered. Developers of benign software may wish to hide algorithms or design details that could be stolen by competing vendors, while malware developers may wish to hinder or slow down analysis, or to avoid detection altogether. Anti-analysis techniques cover any changes or additions that malware developers make to their software that do not change its functionality but increase the work required to analyze it. Time is often limited when handling an incident, and analyzing a trojan, worm or other malware quickly can yield essential information.

As Itay Cohen observes:

These checks might indicate that the attackers not only deeply learned the source code of #SolarWinds, but also learned the topology of their networks and internal development domain names to minimize the risk that a vigilant employee will notice the anomaly.

Their efforts to stay undetected are impressive. They carefully tested the feasibility of the attack by first deploying a backdoor without malicious capabilities, wrote their code with #SolarWinds coding style, and avoided the infection of the company’s internal networks.

Subverting Static Analysis defenses

Most anti-analysis techniques targeting static analysis use some form of code obfuscation in an attempt to hide what the code does (for example, the FNV-1a hashes embedded in the compromised SUNBURST codebase). Changes are made to the code, before or after compilation, to mask its intent, increase the amount of work required to interpret it, or alter its signature, without changing its behavior.
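To see why such hashes frustrate static analysis and code grepping, here is a minimal Python sketch of 64-bit FNV-1a using the standard FNV parameters (offset basis 0xcbf29ce484222325, prime 0x100000001b3). A binary that ships only hash values never contains the plain-text strings it compares against, so searching it for a suspicious process name finds nothing. The blocklisted names are hypothetical stand-ins, not SUNBURST's actual list, and the sketch omits any extra transformation the real implant may have applied to its hashes.

```python
FNV64_OFFSET_BASIS = 0xCBF29CE484222325
FNV64_PRIME = 0x100000001B3
MASK64 = (1 << 64) - 1


def fnv1a_64(text: str) -> int:
    """64-bit FNV-1a hash of the UTF-8 bytes of `text`."""
    h = FNV64_OFFSET_BASIS
    for byte in text.encode("utf-8"):
        h ^= byte                       # FNV-1a: XOR the byte in first...
        h = (h * FNV64_PRIME) & MASK64  # ...then multiply, keeping 64 bits
    return h


# In a real binary these would be precomputed integer literals, so the names
# never appear; they are computed here only to keep the sketch short.
# Hypothetical, illustrative names (not SUNBURST's actual list).
EMBEDDED_HASHES = frozenset(fnv1a_64(name) for name in ("wireshark", "procmon"))


def is_blocklisted(process_name: str) -> bool:
    """Compare a lower-cased process name against the embedded hashes only."""
    return fnv1a_64(process_name.lower()) in EMBEDDED_HASHES


if __name__ == "__main__":
    print(hex(fnv1a_64("a")))           # 0xaf63dc4c8601ec8c
    print(is_blocklisted("Wireshark"))  # True
```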

Some of the common obfuscation techniques employed by malware developers to subvert static analysis are:

- Code substitution: change one or more instructions to different ones that produce the same result.

- Register reassignment: change which registers values are stored in.

- Code transposition: reorder code by adding or removing unnecessary jumps between instructions. The order of execution remains the same, but the order in memory changes: the instructions are divided into random, non-overlapping sequences, the sequences are reordered, and a jump to the next sequence is inserted at the end of each one.

- Inlining / outlining (control flow alteration): inlining removes function calls by inserting the function’s code into the caller’s code where the original call was made. Outlining is the opposite: random bits of code are removed, made into a function, and replaced with a call to that function. Both alter the control flow while producing the same result.

- Indirect jumps: the target address of a jump is determined at runtime, usually by jumping to a value stored in a register. A normal jump can be converted to an indirect jump by first storing the jump target in a register and then jumping to the value of that register. Simple techniques like this are easily de-obfuscated by a human analyst, but can often fool automatic analysis.

- Packing: the code of the malware is compressed (and often also encrypted) and wrapped in another executable. That executable contains a decryption and decompression routine that unpacks the original malware, maps it into executable memory, and executes it. Packing hinders static analysis, as the malware cannot be analyzed without unpacking it first.
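Packing usually leaves a measurable trace: compressed or encrypted data looks close to random. A common triage heuristic, sketched below under the assumption that you already have a section's raw bytes, is to compute its Shannon entropy in bits per byte (maximum 8.0). The 7.2 threshold is an illustrative assumption, not a standard value, and packers can deliberately lower entropy (for example with padding) to evade this check.

```python
import math
import os
from collections import Counter


def shannon_entropy(data: bytes) -> float:
    """Shannon entropy of `data` in bits per byte: 0.0 for empty or constant input, at most 8.0."""
    if not data:
        return 0.0
    total = len(data)
    return sum((n / total) * math.log2(total / n) for n in Counter(data).values())


def looks_packed(section: bytes, threshold: float = 7.2) -> bool:
    """Heuristic: flag sections whose entropy is close to that of random data."""
    return shannon_entropy(section) >= threshold


if __name__ == "__main__":
    plain = b"hello world " * 1000  # repetitive, low-entropy data
    random_like = os.urandom(4096)  # stand-in for an encrypted/compressed payload
    print(round(shannon_entropy(plain), 2), looks_packed(plain))
    print(round(shannon_entropy(random_like), 2), looks_packed(random_like))
```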

Subverting Dynamic Analysis defenses

Dynamic analysis involves executing the malware and recording/observing changes to the computer or network. To counter dynamic analysis, malware developers have implemented checks that determine whether the malware is being dynamically analyzed. If the malware determines that it is being analyzed or executed in an unexpected, secure environment (sandbox), it stops itself so as not to reveal its intended actions. Some samples go as far as attempting to delete their own executable from disk before terminating when they detect a debugger or an unexpected environment.

Techniques targeting dynamic analysis can be divided into two categories: debugger detection (anti-debugging) and environment detection (anti-virtual machine).

- Debugger detection, often referred to as anti-debugging, covers techniques that attempt to detect whether the malware is being debugged during execution. Debuggers can inspect the internal state of a program and manipulate it during execution by modifying code or values in registers or memory. Malware analysts often use debuggers to determine the functionality of malware samples, as a sample may not exhibit all of its functionality during normal execution.

- Environment detection involves attempting to determine the environment in which the malware is being executed. If the malware is able to determine that it is running in a secure environment, it can change its functionality or terminate. Dynamic analysis is usually conducted in virtual machines, so a lot of malware focuses on detecting virtual machine artifacts.
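For analysts, it helps to know what these checks look like. Native Windows malware typically relies on APIs such as IsDebuggerPresent, or on registry, process and hardware artifacts; the Python sketch below only mirrors two simple ideas. The first matches a network adapter's MAC prefix against OUIs registered to hypervisor vendors (a partial, illustrative table). The second checks for an installed trace function as a stand-in for debugger detection; `python_tracer_attached` is a hypothetical helper name, and because coverage tools also install trace functions it can produce false positives.

```python
import sys
from typing import Optional

# Partial, illustrative table of OUI prefixes (first three MAC bytes) of hypervisor vendors.
VM_MAC_PREFIXES = {
    "00:05:69": "VMware",
    "00:0c:29": "VMware",
    "00:1c:14": "VMware",
    "00:50:56": "VMware",
    "08:00:27": "VirtualBox",
    "00:15:5d": "Microsoft Hyper-V",
}


def vm_vendor_from_mac(mac: str) -> Optional[str]:
    """Return the hypervisor vendor if the MAC's OUI prefix belongs to one, else None."""
    prefix = mac.strip().lower().replace("-", ":")[:8]
    return VM_MAC_PREFIXES.get(prefix)


def python_tracer_attached() -> bool:
    """Debugger-detection analogue: pdb (via bdb) installs a trace function with sys.settrace."""
    return sys.gettrace() is not None


if __name__ == "__main__":
    print(vm_vendor_from_mac("08:00:27:12:34:56"))  # VirtualBox
    print(python_tracer_attached())
```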

Advanced Control Flow and Data Flow Analysis to uncover backdoors

This approach builds the control flow graphs and data flow graphs of the malware, converts the graphs into feature vectors using various techniques, and uses the feature vectors for machine-learning-based classification.

- The first step is an initial analysis of the program to determine function and section boundaries (ENTRY points, TRANSFORMATIONS, and EXIT points). This yields either a single graph or many subgraphs, together representing a collection of inter- and intra-procedural representations.

- The second step removes any information that is not relevant to classification, for example by pruning subgraphs that belong to libraries unrelated to the codebase (removing nodes/edges from the graph based on fixed criteria).

- The third step converts the control flow graph into a feature vector that can be used for classification. The nodes in the graph may contain function names, assembly instructions or memory addresses. Using instructions or function names directly has a drawback:

Common obfuscation techniques, such as changing an instruction into a different one that produces the same result or reordering instructions, will greatly reduce the similarity between feature vectors. To make this step resistant to obfuscation, we can use graph coloring, where each node in the graph is assigned a color with a bitwise representation. Bits are set based on which instructions are present in the node. By grouping similar instructions, we counter obfuscation based on replacing instructions with similar ones. Edge attributes may include the type of jump an edge represents, or whether it is a far jump. The origin and destination may be included as pairs of nodes, or simply encoded by the order in which the nodes are added to the feature vector.

- The final step is classification, with standard performance metrics used to evaluate the trained classifier and to compare different classifiers. Some of the recommended classification techniques are:

– Random Forest: a well-researched method that is considered to be on par with state-of-the-art algorithms with regard to classification accuracy and generalization. The main drawback is that the model may consist of hundreds of trees, and interpreting how a decision was reached across them is difficult.

– Naive Bayes: a probabilistic classifier that assumes the features are conditionally independent given the class. The main drawback is that this assumption does not always hold in real-world scenarios.

– Support Vector Machines: supervised learning methods used for both classification and regression. An SVM model is trained by determining the optimal hyperplane for separating samples of two different classes. The optimal hyperplane is the separating hyperplane that lies furthest from the samples nearest to it; those nearest samples are referred to as support vectors.

– Multi-layered perceptron (Artificial Neural Networks): models are built from neurons, arranged in layers, which interact with each other through weighted connections. Each neuron in one layer is connected to every neuron in the previous and next layers. The first layer is the input layer, the final layer is the output layer, and the one or more layers in between are the hidden layers.
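The coloring and classification steps above can be sketched in a few lines of Python. This is a toy illustration under explicit assumptions: the instruction groups, edge kinds and hand-written CFGs are all hypothetical, CFG extraction and pruning (the first two steps) are skipped, and the classifier is a Bernoulli Naive Bayes implemented from scratch (one of the techniques listed above) to avoid external dependencies.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical instruction groups: mnemonics that can stand in for one another
# share a group, so swapping "xor eax, eax" for "sub eax, eax" keeps the color.
INSTRUCTION_GROUPS = {
    "arith": {"add", "sub", "xor", "and", "or", "inc", "dec", "neg"},
    "branch": {"jmp", "je", "jne", "jz", "jnz", "ja", "jb", "jl", "jg"},
    "call": {"call", "ret"},
    "compare": {"cmp", "test"},
    "move": {"mov", "lea", "push", "pop", "xchg"},
}
GROUP_BITS = {name: 1 << i for i, name in enumerate(sorted(INSTRUCTION_GROUPS))}
N_COLORS = 1 << len(GROUP_BITS)          # 32 possible node colors
EDGE_KINDS = ("cond", "fall", "uncond")  # hypothetical edge attributes

# A CFG here is (node id -> instructions, [(src, dst, edge kind)]).
Cfg = Tuple[Dict[int, List[str]], List[Tuple[int, int, str]]]


def color_node(instructions: List[str]) -> int:
    """Bitmask with one bit set per instruction group present in a basic block."""
    color = 0
    for ins in instructions:
        mnemonic = ins.split()[0].lower()
        for group, members in INSTRUCTION_GROUPS.items():
            if mnemonic in members:
                color |= GROUP_BITS[group]
    return color


def feature_vector(cfg: Cfg) -> List[int]:
    """Histogram of node colors followed by counts of each edge kind."""
    nodes, edges = cfg
    features = [0] * (N_COLORS + len(EDGE_KINDS))
    for instructions in nodes.values():
        features[color_node(instructions)] += 1
    for _src, _dst, kind in edges:
        features[N_COLORS + EDGE_KINDS.index(kind)] += 1
    return features


def train_bernoulli_nb(samples: List[List[int]], labels: List[str]):
    """Bernoulli Naive Bayes on feature presence, with Laplace smoothing."""
    model = {}
    for cls in sorted(set(labels)):
        rows = [s for s, lab in zip(samples, labels) if lab == cls]
        prior = math.log(len(rows) / len(samples))
        probs = [(sum(1 for r in rows if r[j] > 0) + 1) / (len(rows) + 2)
                 for j in range(len(samples[0]))]
        model[cls] = (prior, probs)
    return model


def predict(model, sample: List[int]) -> str:
    """Return the class with the highest log posterior."""
    def log_posterior(cls: str) -> float:
        prior, probs = model[cls]
        return prior + sum(math.log(p if x > 0 else 1 - p) for x, p in zip(sample, probs))
    return max(model, key=log_posterior)


# Toy, hand-made CFGs (hypothetical data, not real SUNBURST code).
SUSPICIOUS_A: Cfg = ({0: ["xor eax, eax", "mov ecx, 8"], 1: ["cmp eax, ebx", "jne 0x10"],
                      2: ["call hash_name", "ret"]}, [(0, 1, "fall"), (1, 2, "cond")])
SUSPICIOUS_B: Cfg = ({0: ["add eax, 1", "lea ecx, [ebx]"], 1: ["test eax, eax", "jz 0x20"],
                      2: ["call check_env"]}, [(0, 1, "fall"), (1, 2, "cond")])
BENIGN_C: Cfg = ({0: ["mov eax, 1"], 1: ["ret"]}, [(0, 1, "fall")])
BENIGN_D: Cfg = ({0: ["push ebp", "mov ebp, esp"], 1: ["pop ebp", "ret"]}, [(0, 1, "fall")])

MODEL = train_bernoulli_nb(
    [feature_vector(c) for c in (SUSPICIOUS_A, SUSPICIOUS_B, BENIGN_C, BENIGN_D)],
    ["suspicious", "suspicious", "benign", "benign"],
)

# SUSPICIOUS_A with "xor" substituted by the equivalent "sub" (code substitution).
OBFUSCATED_A: Cfg = ({0: ["sub eax, eax", "mov ecx, 8"], 1: ["cmp eax, ebx", "jne 0x10"],
                      2: ["call hash_name", "ret"]}, [(0, 1, "fall"), (1, 2, "cond")])

if __name__ == "__main__":
    print(feature_vector(OBFUSCATED_A) == feature_vector(SUSPICIOUS_A))  # True
    print(predict(MODEL, feature_vector(OBFUSCATED_A)))                  # suspicious
```

Because `xor` and `sub` fall into the same group, the substituted block keeps its color and the feature vector is unchanged, so the classifier's decision is too. A real pipeline would build CFGs with a disassembler and train on a far larger labeled corpus.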

We at ShiftLeft are happy to discuss our viewpoints and offer solutions towards reducing organizational software supply chain risks.

SUNBURST SolarWinds BackDoor : Crime Scene Forensics Part 2 (continued) was originally published in ShiftLeft Blog on Medium, where people are continuing the conversation by highlighting and responding to this story.

*** This is a Security Bloggers Network syndicated blog from ShiftLeft Blog - Medium authored by Chetan Conikee. Read the original post at: https://blog.shiftleft.io/sunburst-solarwinds-backdoor-crime-scene-forensics-part-2-continued-3bcd8361f055?source=rss----86a4f941c7da---4

December 26, 2020 at 10:29AM

SUNBURST SolarWinds BackDoor : Crime Scene Forensics Part 2 (continued) - Security Boulevard